Will Giant Companies Always Have a Monopoly on Top AI Models?

In my post on large language models (LLMs) last week, I argued that the most important question about LLMs is not the outcome of a race with China or when AI will reach human-level intelligence, but whether this striking new technology will be accessible to and serve the interests of ordinary people, or whether it will end up centrally controlled by a small number of highly capitalized companies or governments.

One of the biggest factors in determining which future we face is the question: how easy it is to access the resources needed to build and train a cutting-edge or “frontier” LLM? Inspired by this question, I did a bit of a dive into the training process for LLMs to try to assess the outlook for free LLMs. To explain what I found, I’ll need to look at the basics of how models are trained, as best I understand this fast-moving science from reading what experts in the field are saying.

A turnaround

As I mentioned in the prior post, things started off poorly. In 2022 and 2023, around the time OpenAI’s ChatGPT was released to the public, it looked like there was a real likelihood that this powerful new technology would be like nuclear power: centralized, complex, highly capital-intensive, secretive, and subject to strict security controls. The AI “base model” that was the engine driving the ChatGPT application was called GPT-3, which was succeeded by GPT-4 in 2023. Training these models on the vast amounts of data available on the internet and elsewhere was an enormously expensive undertaking that few organizations could afford. The GPT-4 model over $100 million to train, including the acquisition of 25,000 computer graphics cards and a bill.

And it looked as though this level of resources was just the start. The seemingly miraculous performance of ChatGPT was largely the product of simply taking past research and scaling it up. LLMs that worked very poorly suddenly worked much better simply by exponentially increasing the amount of computing power (“compute”) dedicated to their training. Even though the computations were relatively simple — involving predicting the next “token” (roughly, word or symbol) in a text — when those computations were repeated trillions of times, unexpectedly smart behaviors appeared through a process known as “emergence.”

Emergence

Emergence is a phenomenon in complexity science referring to the fact that large numbers of simple rules can upon repetition produce complex and surprising behaviors that don’t appear to be the predictable result of any characteristics evident in the rules. A classic example is the flocking behavior of birds. Computer programmers trying to recreate that behavior in a computer bird simulation could tie themselves in knots trying to manually direct V-formations and the beautiful merging and diverging of flocks, but it turns out that if each virtual bird is programmed with just a few simple rules (“don’t get too close to your neighbors, but steer toward their average heading and position”) a flock of simulated birds will behave in ways strikingly similar to the complex movements of real flocks. That complex behavior emerges out of the simple rules in ways that nobody could ever predict by looking at the rules.

There are over the precise meaning of “emergence” and the role it plays, but overall it seems to me that there’s no question that when AI systems are scaled up, they become capable of doing things that are surprising to people and far beyond anything they’ve been explicitly programmed to do. And that power is not to be underestimated; AI progress may not soar the way boosters predict — but neither should the technology be reductively dismissed as a mere “word prediction machine” or the like.

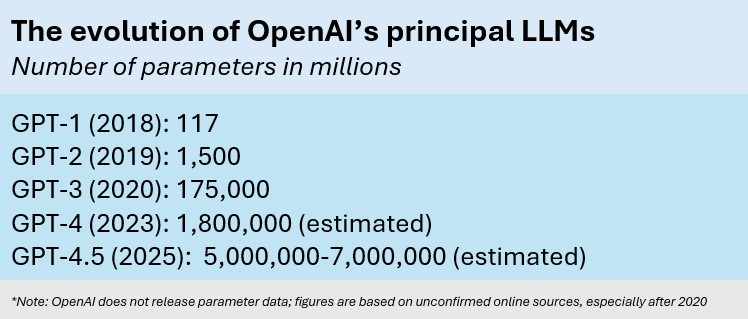

The original GPT-1 base model, released in 2018, had 117 million parameters — numbers that represent the strength of associations between different tokens, akin to synapses in the model’s “brain.” The next model, GPT-2, had over 12 times as many, and GPT-3 had 116 times more than that, powering the chatbot that burst into fame as ChatGPT. GPT-4 had an estimated ten times more and performed far better. Simply programming these models to predict the next word in a sequence led to emergent behaviors that seemed surprisingly (and deceptively) intelligent and human in some respects, and soared far beyond what one might expect from a system that, at root, is trained to simply predict the next word in a text.

In 2022 the lesson seemed clear: exponentially scaling up these models was the secret to success. At the rate things were progressing, it seemed plausible to many that with just a few more exponential leaps we might reach human-level intelligence — what is commonly if vaguely referred to as “artificial general intelligence,” or AGI. This fueled a “Manhattan Project” conception of LLM research as a geopolitical “race” toward a definitive goal: a sudden, secret breakthrough in reaching human or superhuman artificial intelligence. The winner of this race would obtain not a nuclear weapon but some sort of AI equivalent that would provide new levers for permanent dominance in business, the military, and the world. The implications of such a conception are bad for freedom: that research efforts should be concentrated and secretive, while cooperation and openness are foolish.

But a funny thing happened on the way to AGI: the benefits from scaling up the base models appear to have reached a state of significantly . For several years after GPT-4, for example, the AI world was eagerly awaiting, and OpenAI eagerly promoting, GPT-5, but its release was repeatedly delayed until, in late February 2025 the company finally released GPT-4.5 (suspected by to be an expectations-lowering rename of GPT-5). It was not dramatically higher-performing. “GPT 4.5 cost about 100x the compute of GPT-4 to train,” one expert , but “it is only slightly better on normal user metrics. Scaling as a product differentiator died in 2024.” Indeed, OpenAI’s competitors experienced a similar leveling off of progress in base-model training. The release of a model called GPT-5 in early August only this trend.

This was true even before the Chinese company DeepSeek made its enormous splash in December 2024 and January 2025, releasing models that achieved much more power at far lower training cost than previous models had been able to achieve. Some it to a company offering $50 smartphones more powerful than the latest $1,000 iPhone. This roiled stock valuations and was viewed largely through the lens of US-China geopolitical rivalries. The real significance of the DeepSeek innovations, however, was that it both clarified and accelerated the declining plausibility of the Manhattan Project model of LLM research.

The stages of training

As research continues, however, it is controversial and unclear to what extent the big science character of LLM training will continue to fade. The training of base models, where the returns to scale have apparently leveled off, at least for now, is only one step in creating LLMs. Meanwhile, other steps in the creation of finished models are being scaled up and absorbing more resources.

Overall, there are four basic stages in the creation of a model today, and they vary in what kinds of resources they require.

a. Data preparation

Access to data for training the base model is the first thing that any actor wanting to train an LLM will need. The data that will be used to train the model must be selected, gathered, and perhaps filtered. Typically this means enormous masses of raw textual data (what is often summarized as “the entire Internet,” though it can also include the texts of books, messages, social media posts, and other things that may not be online). Images and video are increasingly being used as well for so-called “multi-modal” models that aim to understand images as well as text.

It’s not just big companies that can access all this data; there are a of open data sets that are available for anyone to use. The most prominent, perhaps, is the nonprofit organization ’s dataset, which includes regular snapshots of the entire public web gathered since 2008. Other publicly available datasets include the texts of books, Wikipedia, computer code repositories, and research papers. Experts say the biggest LLM players like OpenAI, Google, and Anthropic, reportedly do their own web crawling instead of just using such databases, and may have more resources to curate and filter the vast oceans of data that are poured into LLM pre-training. It’s not clear how much of an edge such work gives them in the quality of an LLM end product.

One of the reasons that the benefits of scaling up base-model training may have levelled off is that the amount of training data used has not scaled up commensurately with the number of parameters in the newest models at the frontier of research, according to . The internet and other prominent data sources have all been tapped by all the most prominent LLMs. But big companies that have exclusive access to other sources of data may as a result have an important leg up. Elon Musk’s model Grok, for example, has been trained on data from Twitter/X that nobody else can access. Other big LLM companies like OpenAI don’t have their own social media networks, and independent scientists certainly don’t. Again it is unclear how much of an advantage access to that kind of proprietary data will prove to be over time.

b. Pre-training

Pre-training is the first step in actually creating a general LLM “base model” (as opposed to smaller or more specialized models derived from it). It involves teaching the model the basics of language by running the vast datasets through thousands of powerful graphical processing unit (GPU) cards to teach it to predict the next token in a string of text, building in the process a map of associations between different words and concepts. The output of this training is a set of model weights — essentially, a large set of numbers (in the largest models, trillions) representing the strength of associations or “thickness of the lines” between different tokens (corresponding roughly to neurons in the model’s “brain”).

As we have seen, pre-training is yielding diminishing returns, but it remains the most expensive step that uses the vast majority of the compute involved in creating a model.

There’s another technical development that may reduce the barriers to entry to training models. Most base-model training has taken place in specialized server farms with expensive GPUs packed close together because of the need to transfer enormous amounts of data many times between GPUs and very quickly (an ability known as “interconnect”) at each training step. This was seen as so vital that U.S. export controls targeted at China didn’t attempt to restrict compute, but only interconnect, on the assumption that would hobble China’s ability to do AI. But advances are being made in distributed training that allow far-flung computers to accomplish the same tasks. One expert, Nathan Labenz, that distributed training of LLMs as good as recent frontier models “is the kind of thing now that a well-organized but distributed group could probably potentially patch together the resources to do.”

c. Post-training

In the post-training stage, a base model’s abilities in a specific area are shaped and refined through a variety of techniques. Supervised fine-tuning (SFT) can be used to refine the model’s abilities by providing it with a cultivated set of examples in a specialized area — for example, if you want the model to write about finance, health care, or the law, you might fine-tune it with data from those specialties. Another technique is “reinforcement learning from human feedback” (RLHF), in which humans give a model’s outputs thumbs ups and downs to nudge it toward behaving in certain ways and not others. A model can also be trained with synthetic data, feedback from another model, internal model self-critiques, or in some areas from self-play in which a model competes against itself.

Post-training is becoming an part of model building today. Several years ago it was mostly focused on style and safety, but its applications have gotten much broader. Post-training techniques are now used to shape a base model into a variety of derivative models. It could not only be trained to answer questions in a helpful and engaging manner for a chatbot, but also to actively search and retrieve new information (for “retrieval-augmented generation,” or RAG), to carry out tasks (for agents), to include images (for language vision models), or to specialize in answering objective scientific questions (for ).

Experts say that growth in the importance of post-training will likely continue. As Nathan Lambert of the nonprofit Allen AI research institute , “it’s very logical that post training will be the next domain for scaling model compute and performance” — meaning that like post-training scaling while it lasted, ever-larger resources may be needed to stay on the post-training cutting edge. Lambert points out that post-training is “still far cheaper than pretraining,” but that “post-training costs have been growing rapidly” into the tens of millions of dollars.

Still, there are many organizations that can spend tens of millions of dollars, compared to the billions of dollars that many expected model training to eventually cost. In addition, experts Like Labenz that the lower technical difficulty of post-training makes it accessible to many more parties than base model training. “One of the biggest developments has been the recent revelation that reinforcement learning, on top of at least sufficiently powerful base models, really works and actually can be a pretty simple setup that works remarkably well,” he says.

d. Inference

Inference-time compute is the processing that takes place after a user has made a query. When ChatGPT was first released, and for some time afterwards, LLMs did very little of this kind of processing, but the trend has been toward much more. Partly that is a result of reasoning models, which are created in post-training by giving LLMs large numbers of problems where there are objective right and wrong answers, a technique called reinforcement learning from verifiable rewards (RLVR). When training on problems with objective answers, such as in coding, science and math, models can be trained quickly and in great depth without human participation — teaching themselves, essentially. The researchers at Deepseek that one of the emergent (spontaneously emerging) behaviors produced by such training was chain-of-thought reasoning, in which the model explicitly “thinks” step-by-step about the query it has received, explaining its reasoning along the way, and backtracking if necessary, before arriving at the answer. Although this emerges from training on science and math arenas, it appears that it generalizes to other, more subjective domains as well, making the model do better at all kinds of queries, including such things as legal reasoning. Wherever deployed, chain-of-thought reasoning increases the inference-time compute and thus the cost of running a model.

Another development that adds to the costs of inference is a trend toward larger “context windows.” In many ways LLMs are like the protagonist of the movie Memento or a dementia patient who can access a lifetime’s worth of background memory and knowledge, but is unable to form new memories. In answering queries, LLMs always have at hand the world-knowledge that they gained during pre- and post-training, but this knowledge is frozen. In terms of new input — what they can keep in “mind” during a single conversation — they have rather small short-term memories.

The original ChatGPT is to have had a context window of 8,192 tokens. Increasing context windows is expensive because in preparing an answer, an LLM must compare every token in the window to every other token. That means that the amount of processing compute needed rises roughly by the square of the number of tokens in memory. Nevertheless, some models now have relatively enormous context windows, such as with 10 million tokens. This is in part due to clever innovations that are making it possible to reduce the compute needed to work with a window that big. But it’s still expensive. And even as inference becomes more compute-intensive, there is a strong demand for faster inference times, which is desirable for those who are want to use them for coding or for real-time applications like audio chat and live translation.

When inference costs are low, creating an LLM is like creating a railroad — it involves enormous upfront capital costs to build it, but then once built, relatively low marginal costs to run it. To the extent that inference costs grow, that raises ongoing operating costs. Providers are increasingly vying to offer large context windows and fast inference speeds — competing demands that are fast becoming major vectors of competition among LLM providers and reward computing power, scale, and centralization.

A diffusion of training ability

Overall, LLM research is in many ways “spreading outward” compared to its initial Manhattan Project-like character, and becoming more broadly accessible, giving us reason to hope that — especially with active measures by policymakers — LLMs may just not become the latest center of growing corporate power over individuals. The picture is complicated and fast-changing, however. It’s hard to know what direction progress will come from and how accessible the data, compute, interconnect, and other resources required for such progress will be. But that is the thing to watch — and the thing that policymakers should be actively trying to affect.

In the next installment of this series, I’ll look at another crucial factor in determining the future democratic character of LLMs: the state of open source LLM research and models.